Alana Scotchmer

Partner


Recently, the Office of the Superintendent of Financial Institutions ("OSFI") launched a consultation on its draft Guideline E-23: Model Risk Management ("Draft Guideline E-23"), as announced in OSFI's Annual Risk Outlook for 2022-2023. This consultation is part of OSFI's response to financial institutions facing an evolving risk environment because of the rapid growth of emerging technologies in the financial ecosystem. The consultation on Draft Guideline E-23 is an example of OSFI's recent focus on non-financial risks, and the increasing complexity of Canada's regulatory landscape. The regulation of models intersects with many other trending topics of regulation, including technology risk, privacy and data protection, and third-party risk management. The seemingly limitless potential of models will almost certainly be tempered by the growing burden of related regulatory compliance.

We anticipate that this consultation will be scrutinized closely by both participants in and observers of Canada's financial system since it addresses the use of artificial intelligence / machine learning ("AI/ML") methods by federally regulated entities in their models when processing and analyzing data. On May 20, 2022, OSFI published a summary of initial proposed revisions to Guideline E-23 in a letter to all federally regulated financial institutions and federally regulated pension plans, which put the industry on notice that the scope and requirements of Guideline E-23 would be changing. OSFI released Draft Guideline E-23 on Nov. 20, 2023, nearly one year following its initially scheduled release date in Q1 2023.

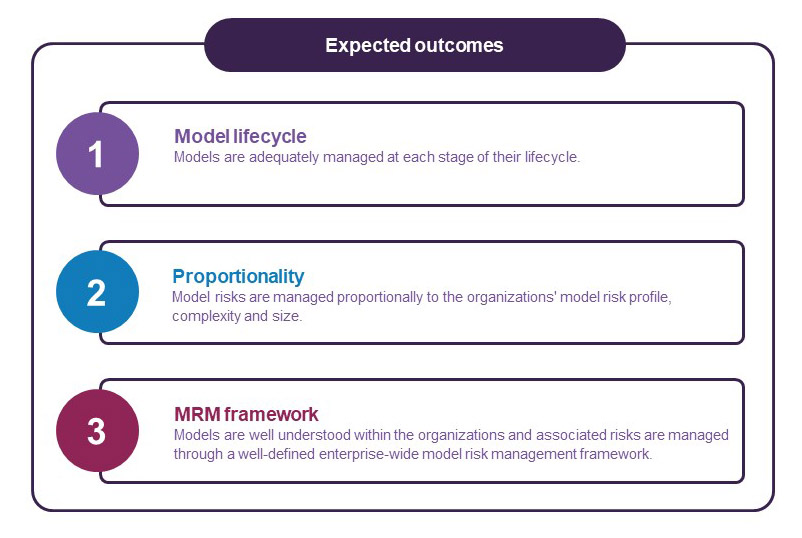

The most notable feature of Draft Guideline E-23 is its expanded scope: it captures all federally regulated financial institutions ("FRFIs"), including both deposit-taking institutions ("DTIs") and federally regulated insurance companies, as well as federally regulated private pension plans ("FRPPs"). By contrast, the current Guideline E-23 applies to banks, bank holding companies, federally regulated trust and loan companies and cooperative retail associations. Draft Guideline E-23 sets out the following expected outcomes for organizations to achieve:

In keeping with other recent OSFI guidelines, Draft Guideline E-23 applies a principles-based approach to regulation of model risk and the principle of proportionality. In theory, principles-based regulation and proportionality allow OSFI flexibility in applying regulatory requirements to account for differences in risk profile, complexity and size among regulated entities.

Draft Guideline E-23 defines model as follows:

"The application of theoretical, empirical, judgmental assumptions and/or statistical techniques, including AI/ML methods, which processes input data to generate results. A model has three distinct components:

OSFI defines model broadly, and this definition of model can capture aspects of digitization, advanced analytics and AI/ML. In contrast, OSFI defines model risk narrowly as follows:

"The risk of adverse financial (e.g., inadequate capital, financial losses, inadequate liquidity, underfunding of defined benefit pension plans), operational, and/or reputational consequences arising from flaws or limitations in the design, development, implementation, and/or use of a model. Model risk can originate from, among other things, inappropriate specification, incorrect parameter estimates, flawed hypotheses and/or assumptions, mathematical computation errors, inaccurate/inappropriate/incomplete data, improper or unintended usage, and inadequate monitoring and/or controls."

Perhaps unsurprisingly, OSFI's proposed definition of model risk focuses on the potential negative outcomes of regulated entities relying on models. This is at odds with modern conceptions of enterprise risk management, in which organizations seek to exploit the possibility of positive outcomes in addition to mitigating potential adverse effects.

OSFI acknowledges that FRFIs and FRPPs rely on models to make business decisions based on data and calculations. Models are becoming more common and more complex in the financial services sector. This is particularly true for AI/ML, which can learn from being trained on large data sets to make predictions or generate recommendations for decision making. One of the major concerns when deploying these models, particularly generative AI-based models, is that their errors or limitations can cause operational losses and reputational damage for organizations. As such, Draft Guideline E-23 recommends that organizations prioritize the mitigation of model risks through rigorous model risk management ("MRM") practices and oversight, with sufficient controls grounded in a comprehensive understanding of the model lifecycle and a robust MRM framework.

OSFI recognized in its 2020 discussion paper Developing Financial Sector Resilience in a Digital World that there is ambiguity in defining a "model" within the AI/ML context and that organizations may not categorize all AI/ML methods as models. Regardless of any internal classification, OSFI is of the view that appropriate governance and controls must always accompany the use of AI/ML, whether or not it meets the definition of a model. OSFI, jointly with the Financial Consumer Agency of Canada, recently released a voluntary questionnaire for FRFIs on their use of AI/ML and quantum computing. OSFI plans to analyze the results of this questionnaire after Feb. 19, 2024 and then share current practices with participating institutions.

OSFI's inquiries into the regulation of AI/ML have focused on the "EDGE" principles: explainability, data, governance and ethics. In the spring of 2023, OSFI partnered with the Global Risk Institute to convene the Financial Industry Forum on Artificial Intelligence ("FIFAI"), a community of AI thought leaders. FIFAI subsequently released a report entitled A Canadian Perspective on Responsible AI, which explored the application of the EDGE principles in regulating the use of AI in the Canadian financial sector. The work of FIFAI built upon OSFI's 2020 report Developing Financial Sector Resilience in a Digital World.

OSFI's work on the regulation of AI/ML is set against the backdrop of the development of AI-specific legislation and regulation in Canada, which also incorporates the EDGE principles. Bill C-27, currently before Parliament, proposes to enact the Artificial Intelligence and Data Act ("AIDA") as part of a legal framework for data that would regulate AI systems more broadly in Canada. This proposed legislation would set new rules for AI products and systems, imposing regulatory requirements both for AI systems generally and for those AI systems referred to as "high-impact systems." The financial services sector is expected to have to comply with this legislation. Notably, Bill C-27 has passed second reading and has been referred to the House Standing Committee on Industry, Science and Technology (the "Committee").

On Nov. 28, 2023, Minister of Innovation, Science, and Industry François-Philippe Champagne presented the Committee with the government's proposed amendments to AIDA. The proposed amendments set out seven initial classes of high-impact systems, one of which pertains to the provision of services. This class encompasses AI systems used to: (a) determine whether to provide the services to individuals, (b) ascertain the type or cost of the service to be provided to the individual, and (c) prioritize service to individuals. The proposed amendments also set out requirements for an accountability framework. We will have to wait to see how the proposed amendments affect the Committee's study, and how AIDA affects the industry as the legislation and regulations develop.

Recognizing the ambiguity as to how AIDA will apply to the AI systems that will be subject to the legislation, Innovation, Science and Economic Development Canada, a federal government department, published the Voluntary Code of Conduct on the Responsible Development and Management of Advanced Generative AI Systems ("Code of Conduct") in September 2023. For more on the Code of Conduct, see Gowling WLG's article Canada publishes Voluntary Code of Conduct on generative AI systems. The Code of Conduct identifies measures for organizations to undertake when developing and managing generative AI systems. Both AIDA and the Code of Conduct incorporate aspects of the EDGE principles.

In addition, Quebec's financial sector regulator, the Autorité des Marchés Financiers ("AMF"), published a report in November 2021 entitled Artificial intelligence in finance: Recommendations for its responsible use, which also incorporates elements of the EDGE principles. The AMF's interest in issuing a report on the use of AI suggests that provincial regulators may also be looking to enter the fray of AI regulation.

These developments suggest that the future may hold multiple parallel AI regulatory regimes that would apply to federally regulated entities and their business activities within the provinces and territories of Canada. Because thought leadership so far has focused on incorporating the EDGE principles, these regimes are likely to overlap, resulting in at least some measure of regulatory duplication unless federally regulated entities are specifically excluded from other levels of regulation. This increased regulatory burden may slow the use of models, including AI/ML, by small- and medium-sized regulated entities in particular, unless regulators apply the principle of proportionality thoughtfully.

Draft Guideline E-23 introduces a definition for "model lifecycle," which comprises all steps for operating, governing and maintaining a model, until it is decommissioned.

We see particular emphasis on the risks arising from the data used to develop the model: the data has to be relevant, complete, timely, traceable and free from error, so that it is "cleansed" before it is used for model development. There should also be a validation process, approvals throughout a model's lifecycle, deployment of the model in collaboration with all parties involved, and ongoing monitoring.

Draft Guideline E-23 sets out seven principles, which are summarized in the following table.

OSFI seeks input from stakeholders and invites the industry and the public to comment on Draft Guideline E-23. OSFI's consultation on Draft Guideline E-23 will remain open until March 22, 2024. In addition, OSFI will host an industry information session on Jan. 17, 2024. Currently, OSFI anticipates that the final guideline will take effect on July 1, 2025.

We will continue monitoring developments from OSFI, including with respect to Draft Guideline E-23. Our financial services regulatory professionals are available to assist stakeholders in the consultation process and to advise on any concerns or questions about implementing the guideline.

For any questions you may have about this topic, please contact one of the authors or members of our Financial Services Regulation Group.
